
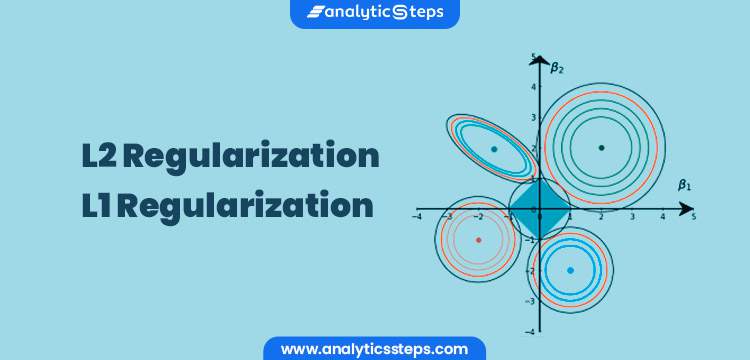

L2 and L1 Regularization in Machine Learning

- Neelam Tyagi

- Mar 01, 2021

In supervised machine learning, models are trained on a training dataset. A model may perform accurately on the training data yet fail to perform well on the test data, producing high error because of factors such as collinearity, the bias-variance trade-off, and overfitting to the training data.

For example, when a model learns the noise in the training data along with the signal, it cannot perform well on new data that it was not trained on. This condition is called overfitting.

In short, overfitting means low error on the training dataset and high error on the test dataset.
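A minimal sketch makes this concrete. It assumes hypothetical data (a target of sin(2πx) plus Gaussian noise with standard deviation 0.3, 10 training points, 200 test points) and uses a polynomial that passes through every training point, an extreme example of a model that memorizes noise. The helper names are illustrative only.

```python
import math
import random

def interpolate(xs, ys, x):
    """Evaluate the polynomial of degree len(xs) - 1 that passes through every (xs[i], ys[i])."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0  # Lagrange basis polynomial for point j, evaluated at x
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total

def mse_of_interpolant(eval_xs, eval_ys, fit_xs, fit_ys):
    errors = [(y - interpolate(fit_xs, fit_ys, x)) ** 2 for x, y in zip(eval_xs, eval_ys)]
    return sum(errors) / len(errors)

rng = random.Random(42)

def noisy_sample(n):
    # hypothetical data: sin(2*pi*x) plus Gaussian noise with standard deviation 0.3
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, 0.3) for x in xs]
    return xs, ys

train_x, train_y = noisy_sample(10)
test_x, test_y = noisy_sample(200)

train_mse = mse_of_interpolant(train_x, train_y, train_x, train_y)  # ~0: every point is memorized
test_mse = mse_of_interpolant(test_x, test_y, train_x, train_y)
print(f"train MSE = {train_mse:.4f}, test MSE = {test_mse:.4f}")
```

The training error is essentially zero because the curve reproduces every noisy training label, while the error on fresh test points is clearly larger: low training error, high test error.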

Various methods can be used to avoid overfitting a model to the training data, such as cross-validation, reducing the number of features, pruning, and regularization, among others.

Since today's focus is on overfitting and regularization techniques, we start by learning the concept of regularization and why it matters for overfitting.

This blog covers the following topics:

- Bias-Variance Tradeoff
- Understanding Regularization
- What is L1 Regularization?
- What is L2 Regularization?
- L2 vs L1 Regularization

Bias-Variance Tradeoff

Bias and variance describe how a model's estimated function deviates. Bias measures the deviation, or error, of the model's predictions from the true value of the function; variance measures how much the estimated function changes when it is fitted on different training samples drawn from the dataset.

For a model that generalizes, we want bias to be as low as possible, which leads to high accuracy. We also do not want the outputs to vary greatly from one training sample to another, so low variance is needed for the model to perform well.

The relationship between bias and variance is closely tied to overfitting, underfitting, and model capacity: in the generalization error, where bias and variance are the key components, increasing model capacity tends to increase variance and decrease bias.

The trade-off is the tension between the error introduced by bias and the error introduced by variance.
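For squared-error loss, this tension can be written down with the standard decomposition of the expected error at a point x. The notation here is introduced for illustration: the data follow y = f(x) + ε with noise of mean 0 and variance σ², f̂ is the model fitted on a randomly drawn training sample, and the expectations are taken over training samples and noise.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\Big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\Big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Lowering one of the first two terms by changing model capacity typically raises the other, while the noise term cannot be reduced by any model.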

Figure: Bias-variance tradeoff

The graph shows that:

- As bias is reduced, the model fits a particular training sample very closely but fails to capture the general patterns that hold for data it has not been trained on. Trained on a different sample, it can produce quite different outcomes, which means high variance.
- Similarly, if we want low variance, so that the outputs barely change across different training samples, the model cannot fit the data points closely, which leads to high bias.

See the graph below, where the conditions of underfitting, exact fit, and overfitting can be observed.

Figure: Graphical representation of underfitting, exact fitting, and overfitting

The following algorithms illustrate how the bias-variance trade-off can be configured:

- The support vector machine algorithm has low bias and high variance. The trade-off can be altered by increasing the cost parameter C, treated here as the budget for violations of the margin allowed in the training data; allowing more violations decreases the variance and increases the bias. (In libraries such as scikit-learn, C is defined the other way round, as the inverse of the regularization strength, so there it is decreasing C that has this effect.)
- The k-nearest neighbors algorithm has low bias and high variance. The trade-off can be modified by increasing k, which increases the number of neighbors contributing to each prediction and consequently increases the bias of the model while reducing its variance.
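The effect of k can be checked with a small simulation. This is a minimal sketch under assumed settings that do not come from the original: a hypothetical target function sin(2πx) on [0, 1], Gaussian noise with standard deviation 0.3, 30 training points per sample, and 500 independently drawn training samples. It estimates bias² and variance of the kNN prediction at the single point x = 0.25; `knn_predict` and `bias_variance_at` are illustrative helper names.

```python
import math
import random

def true_f(x):
    # hypothetical "real" function the model tries to learn
    return math.sin(2 * math.pi * x)

def knn_predict(train, x0, k):
    # average the targets of the k training points closest to x0
    nearest = sorted(train, key=lambda p: abs(p[0] - x0))[:k]
    return sum(y for _, y in nearest) / k

def bias_variance_at(x0, k, n_train=30, n_trials=500, noise=0.3, seed=0):
    rng = random.Random(seed)
    preds = []
    for _ in range(n_trials):
        # draw a fresh training sample for every trial
        train = []
        for _ in range(n_train):
            x = rng.random()
            train.append((x, true_f(x) + rng.gauss(0.0, noise)))
        preds.append(knn_predict(train, x0, k))
    mean_pred = sum(preds) / n_trials
    bias_sq = (mean_pred - true_f(x0)) ** 2                          # squared bias
    variance = sum((p - mean_pred) ** 2 for p in preds) / n_trials  # spread across samples
    return bias_sq, variance

if __name__ == "__main__":
    for k in (1, 15):
        b2, var = bias_variance_at(0.25, k)
        print(f"k={k:2d}  bias^2={b2:.4f}  variance={var:.4f}")
```

With these settings, k = 1 should show the larger variance and k = 15 the larger bias²; the exact numbers depend on the random seed and the assumed target function.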

Note that, when modeling data, low bias combined with high variance is called overfitting: the model fits the available data with high accuracy, but when it sees new data it fails to predict accurately, which yields a high test error.

This often happens when the data has many features and the model takes the contributions of all the estimated coefficients into account, fitting the observed values too closely.

In practice, however, often only a few features in a dataset are important and actually influence the prediction.

Therefore, if the insignificant features are numerous, they can appear to add value to the function on the training data, but when new data arrives that has no real connection with these features, the predictions go wrong.

So it becomes important to limit the influence of features to minimize the chance of overfitting, which is where regularization comes in.

What is Regularization?

In regression analysis, the effect of each feature is estimated by a coefficient. If these estimates are restricted, shrunk, or regularized toward zero, the impact of insignificant features can be reduced, which keeps the model from having high variance and gives a stable fit.

Regularization is one of the most widely used techniques for penalizing complex models in machine learning. It is used to reduce overfitting (that is, to reduce generalization error) by keeping the network weights small, which improves the model's performance on new inputs.


In simple words, regularization avoids overfitting by penalizing regression coefficients with large values. More specifically, it shrinks the parameters and thereby simplifies the model, and this more streamlined model tends to make better predictions on new data.

Because it keeps the magnitude of the weights small, regularization (L2 regularization in particular) is also referred to as weight decay.

Moreover, regularization adds penalties to more complex models and ranks the candidate models from least to most overfit. It assumes that smaller weights produce simpler models and therefore help avoid overfitting.

The model with the lowest overfitting score is the preferred choice for prediction.

In general, regularization is widely adopted because simple models generalize better and are less prone to overfitting. Examples of regularization include:

- K-means: limiting the number of clusters to avoid redundant groups.
- Neural networks: constraining the complexity (weights) of the model.
- Random forest: limiting the depth of the trees and the number of branches (splits on new features).

There are various regularization techniques; well-known ones include L1, L2, and dropout regularization. In this blog, however, our main interest is L1 and L2 regularization.

Regularization Term

Both L1 and L2 regularization add a penalty to the cost that depends on model complexity. Instead of computing the cost from the loss function alone, an additional component, known as the regularization term, is added to penalize complex models.

Adding the regularization term reduces the values in the weight matrices, on the assumption that a neural network with smaller weights is a simpler model. This reduces overfitting to a certain extent.


Penalty Terms

Regularization biases the coefficients toward specific values, for example shrinking very small values to zero. It achieves this by adding a penalty term whose strength is set by a tuning parameter. Common penalties are:

- L1 regularization: adds an L1 penalty equal to the sum of the absolute values of the coefficients, which restricts their size. Lasso regression, for example, implements this method.
- L2 regularization: adds an L2 penalty equal to the sum of the squared coefficients. Ridge regression and SVMs, for example, implement this method.
- Elastic net: combines the L1 and L2 penalties and adds a hyperparameter that controls the mix between them.
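The three penalties can be added to a loss in a few lines. This sketch assumes mean squared error as the error term and leaves the bias b out of the penalty, which is a common convention. For the elastic net it assumes the mixed penalty λ·(r·Σ|wᵢ| + (1 − r)·Σwᵢ²) with a mixing ratio r; this is one possible parameterization, and libraries such as scikit-learn scale the terms slightly differently. All function names are illustrative.

```python
def predict(w, b, x):
    # linear model: y_hat = w1*x1 + ... + wn*xn + b
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def mse(w, b, X, y):
    return sum((yi - predict(w, b, xi)) ** 2 for xi, yi in zip(X, y)) / len(y)

def l1_penalty(w):
    return sum(abs(wi) for wi in w)   # sum of absolute weights

def l2_penalty(w):
    return sum(wi * wi for wi in w)   # sum of squared weights

def regularized_loss(w, b, X, y, lam, kind="l2", l1_ratio=0.5):
    if kind == "l1":
        penalty = l1_penalty(w)
    elif kind == "l2":
        penalty = l2_penalty(w)
    elif kind == "elasticnet":
        # assumed mixing: l1_ratio * L1 + (1 - l1_ratio) * L2
        penalty = l1_ratio * l1_penalty(w) + (1 - l1_ratio) * l2_penalty(w)
    else:
        raise ValueError(f"unknown penalty: {kind}")
    # the bias b is not penalized
    return mse(w, b, X, y) + lam * penalty

# hypothetical toy data: 3 samples, 2 features
X = [[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]]
y = [1.0, 4.0, 5.0]
w, b = [2.0, -1.0], 0.0

for kind in ("l1", "l2", "elasticnet"):
    print(kind, regularized_loss(w, b, X, y, lam=0.1, kind=kind))
```

Here the data error is the same in all three cases (MSE = 1/3); only the penalty differs: |2| + |−1| = 3 for L1 and 2² + (−1)² = 5 for L2.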

What is L1 Regularization?

L1 regularization is the preferred choice when there is a high number of features, because it provides sparse solutions. It also gives a computational advantage, since features with zero coefficients can be skipped.

A regression model that uses the L1 regularization technique is called Lasso Regression.
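To illustrate how Lasso produces sparse solutions, here is a minimal sketch of cyclic coordinate descent, one common way of fitting Lasso (the original does not name a solver). It assumes centered data with no intercept and minimizes ½·Σ(y − Xw)² + λ·Σ|wⱼ|. The toy data are hypothetical: the target depends strongly on feature 1, weakly on feature 2 (coefficient 0.1), and not at all on feature 3, and the three feature columns are orthogonal. The function names are illustrative.

```python
def soft_threshold(z, t):
    # shrink z toward zero by t; anything inside [-t, t] becomes exactly 0
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def lasso_coordinate_descent(X, y, lam, n_sweeps=100):
    """Minimize 0.5 * sum((y - X w)^2) + lam * sum(|w_j|), with no intercept."""
    n_features = len(X[0])
    w = [0.0] * n_features
    col_sq = [sum(row[j] ** 2 for row in X) for j in range(n_features)]
    for _ in range(n_sweeps):
        for j in range(n_features):
            # correlation of feature j with the residual that ignores feature j
            rho = 0.0
            for row, yi in zip(X, y):
                pred_without_j = sum(row[k] * w[k] for k in range(n_features) if k != j)
                rho += row[j] * (yi - pred_without_j)
            w[j] = soft_threshold(rho, lam) / col_sq[j]
    return w

# hypothetical data: y = 3 * x1 + 0.1 * x2 (x3 is irrelevant)
X = [[1.0, 1.0, 0.0], [-1.0, 1.0, 0.0], [2.0, -1.0, 0.0],
     [-2.0, -1.0, 0.0], [0.5, 0.0, 1.0], [-0.5, 0.0, 1.0]]
y = [3.1, -2.9, 5.9, -6.1, 1.5, -1.5]

w = lasso_coordinate_descent(X, y, lam=1.0)
print([round(v, 4) for v in w])  # w1 is about 2.905, w2 and w3 are exactly 0.0
```

Feature 2's small true effect falls below the threshold set by λ, so its coefficient is set exactly to zero rather than merely shrunk, and feature 1's coefficient is pulled slightly below its true value of 3.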

Mathematical Formula for L1 regularization

To see how L1 regularization works, consider a linear regression model with n input features x1, x2, ..., xn.

For this model, W and b represent the weights and the bias respectively, where

$$W = (w_1, w_2, \dots, w_n)$$

and b is a single scalar bias (intercept) term.

The predicted result Ŷ is

$$\hat{Y} = w_1 x_1 + w_2 x_2 + \dots + w_n x_n + b$$

Without regularization, the loss is simply the error between the targets Y and the predictions:

$$\text{Loss} = \text{Error}(Y, \hat{Y})$$

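With L1 regularization, the loss adds the sum of the absolute values of the weights:

$$\text{Loss} = \text{Error}(Y, \hat{Y}) + \lambda \sum_{i=1}^{n} |w_i|$$

Here λ is called the regularization parameter; λ > 0 and it is tuned manually. If λ = 0, the loss reduces to the unregularized one (ordinary least squares when the error is the squared error), while a very large λ pushes the coefficients (weights) to 0 and makes the model underfit.

For each weight w, the absolute value |w| is differentiable everywhere except at w = 0:

$$\frac{d|w|}{dw} = \begin{cases} 1 & \text{if } w > 0 \\ -1 & \text{if } w < 0 \end{cases}$$

The gradient descent optimizer computes each new weight from the gradient of the loss, with learning rate η:

$$w_{\text{new}} = w - \eta \, \frac{\partial \text{Loss}}{\partial w}$$

Substituting the L1 loss into this update gives:

$$w_{\text{new}} = \begin{cases} w - \eta\left(\dfrac{\partial \text{Error}}{\partial w} + \lambda\right) & \text{if } w > 0 \\[2ex] w - \eta\left(\dfrac{\partial \text{Error}}{\partial w} - \lambda\right) & \text{if } w < 0 \end{cases}$$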

From this update we can see that:

- When w is positive, the penalty (with λ > 0) makes w less positive by subtracting the learning rate times λ from it.
- When w is negative, the same penalty makes w less negative by adding the learning rate times λ to it.

Either way, the L1 term pushes every weight toward zero by a constant amount per step, regardless of the weight's size.
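The two cases of the L1 update translate directly into a single-weight step. This is a minimal sketch; `l1_gradient_step` is a hypothetical helper, and using 0 as the (sub)gradient of |w| at w = 0 is an assumption, since the derivative is undefined there.

```python
def l1_gradient_step(w, grad_error, lr, lam):
    """One gradient-descent step on Error + lam * |w| for a single weight."""
    if w > 0:
        penalty_grad = lam      # d|w|/dw = +1
    elif w < 0:
        penalty_grad = -lam     # d|w|/dw = -1
    else:
        penalty_grad = 0.0      # |w| is not differentiable at 0; subgradient 0 chosen here
    return w - lr * (grad_error + penalty_grad)

# isolate the penalty by setting the data gradient to zero
print(l1_gradient_step(0.5, 0.0, lr=0.1, lam=1.0))   # moves toward zero by lr * lam
print(l1_gradient_step(-0.5, 0.0, lr=0.1, lam=1.0))  # moves toward zero from below
```

Plain (sub)gradient steps like this can overshoot zero and oscillate around it; practical Lasso solvers typically use soft-thresholding, for example inside coordinate descent, so that weights land exactly on zero.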


What is L2 Regularization?

L2 regularization can deal with multicollinearity (highly correlated independent variables) by shrinking the coefficients while keeping all the variables in the model.

L2 regularization can also be used to estimate the significance of predictors and, based on that, penalize the insignificant ones.

A regression model that uses the L2 regularization technique is called Ridge Regression.

Mathematical Formula for L2 regularization

We use the same linear model as in the L1 section: weights W = (w1, w2, ..., wn), a single scalar bias b, the prediction Ŷ = w1 x1 + w2 x2 + ... + wn xn + b, and, without regularization, the loss Loss = Error(Y, Ŷ).

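With L2 regularization, the loss adds the sum of the squared weights:

$$\text{Loss} = \text{Error}(Y, \hat{Y}) + \lambda \sum_{i=1}^{n} w_i^2$$

Here λ is again the regularization parameter. If λ is zero, the loss again reduces to the unregularized one (OLS for squared error); if λ is extremely large, the penalty dominates the loss and shrinks the weights so strongly that the model underfits.

The gradient descent optimizer computes each new weight as

$$w_{\text{new}} = w - \eta \, \frac{\partial \text{Loss}}{\partial w}$$

where η is the learning rate. Substituting the L2 loss, whose penalty term has derivative 2λw, gives:

$$w_{\text{new}} = w - \eta\left(\frac{\partial \text{Error}}{\partial w} + 2\lambda w\right)$$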
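The L2 update can be sketched the same way. Rearranging it shows why L2 regularization is also called weight decay: each step multiplies the weight by the constant factor (1 − 2ηλ) and then applies the usual data gradient. `l2_gradient_step` and `weight_decay_step` are hypothetical helper names, and the two functions are algebraically identical.

```python
def l2_gradient_step(w, grad_error, lr, lam):
    """One gradient-descent step on Error + lam * w**2 for a single weight."""
    return w - lr * (grad_error + 2 * lam * w)

def weight_decay_step(w, grad_error, lr, lam):
    # same update, rearranged: decay w by a constant factor, then apply the data gradient
    return (1 - 2 * lr * lam) * w - lr * grad_error

for w, g in [(0.5, 0.2), (-1.5, 0.0), (3.0, -0.7)]:
    print(l2_gradient_step(w, g, lr=0.1, lam=1.0), weight_decay_step(w, g, lr=0.1, lam=1.0))
```

With a zero data gradient, the L2 term shrinks a weight by a constant factor per step, so the weight approaches zero without reaching it, whereas the L1 update derived earlier subtracts a constant amount per step.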


L2 vs L1 Regularization

People are often confused about which regularization approach to choose to avoid overfitting while training a machine learning model.

Among the many regularization techniques, such as L1 and L2 regularization, dropout, data augmentation, and early stopping, here we focus on the intuitive differences between L1 and L2 regularization.

- When L1 and L2 are used as loss functions (rather than as penalties), minimizing the absolute error estimates the median of the data, while minimizing the squared error estimates the mean.

- L1 regularization adds the absolute values of the weight parameters to the cost function as its penalty term, whereas L2 regularization adds the squared values of the weights.

- Sparsity means that many coefficients are exactly zero (or very close to zero) while only a few are clearly nonzero; coefficients that approach zero can then be eliminated.

Feature selection takes sparsity further: instead of merely shrinking coefficients toward zero, it sets them exactly to zero, which removes the corresponding features from the model.

In this context, L1 regularization helps with feature selection by eliminating unimportant features, whereas L2 regularization is not recommended for feature selection.

- L2 regularization has a closed-form solution because its penalty is a squared, differentiable function of the weights; for ridge regression with design matrix X and targets y it is w = (XᵀX + λI)⁻¹Xᵀy. L1 regularization has no closed-form solution in general, since it includes an absolute value, which is not differentiable at zero.

For this reason, L1 regularization is relatively more expensive to compute: it cannot be solved with a single matrix computation and relies on iterative numerical methods such as coordinate descent.

L2 regularization tends to give more accurate predictions when the output depends on most of the input variables, and its closed-form solution keeps it computationally cheap.
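The closed-form ridge solution can be sketched in a few lines. This assumes no intercept and the objective Σ(y − Xw)² + λ·Σw², whose minimizer solves (XᵀX + λI)w = Xᵀy. The small Gaussian-elimination helper `solve_linear` is included only to keep the example self-contained; in practice a library routine such as `numpy.linalg.solve` would be used. The hypothetical data duplicate one feature, the extreme case of multicollinearity: without the penalty the system is singular, while with λ = 1 ridge shares the weight equally between the two copies.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def ridge_closed_form(X, y, lam):
    """w = (X^T X + lam * I)^(-1) X^T y, with no intercept."""
    p = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve_linear(XtX, Xty)

# hypothetical data: the second feature is an exact copy of the first
X_dup = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y_dup = [2.0, 4.0, 6.0]

try:
    ridge_closed_form(X_dup, y_dup, lam=0.0)
except ValueError as err:
    print("lambda = 0:", err)
print("lambda = 1:", ridge_closed_form(X_dup, y_dup, lam=1.0))
```

With λ = 1, both weights come out equal (28/29 for this data), so all the variables are kept and the total effect of about 2 is split between the correlated copies.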


The table below summarizes the differences between L1 and L2 regularization:

| S.No | L1 Regularization | L2 Regularization |
|---|---|---|
| 1 | Penalizes the sum of the absolute values of the weights. | Penalizes the sum of the squared weights. |
| 2 | Has a sparse solution. | Has a non-sparse solution. |
| 3 | Can have multiple solutions. | Has only one solution. |
| 4 | Has built-in feature selection. | No feature selection. |
| 5 | Robust to outliers (strictly, a property of the L1 loss, i.e. absolute error). | Not robust to outliers (strictly, a property of the L2 loss, i.e. squared error). |
| 6 | Generates simple and interpretable models. | Gives more accurate predictions when the output variable is a function of all the input variables. |
| 7 | Unable to learn complex data patterns. | Able to learn complex data patterns. |
| 8 | Computationally inefficient in non-sparse settings. | Computationally efficient because it has an analytical solution. |

Conclusion

Regularization is one of the most widely used mathematical techniques for preventing overfitting. It achieves this by penalizing complex ML models through a regularization term added to the model's loss (cost) function.

- L1 regularization drives the weights of unimportant features to exactly zero, effectively keeping or dropping each feature, and is used to reduce the number of features in a high-dimensional dataset.
- L2 regularization spreads the shrinkage across all the weights rather than eliminating any, which tends to produce more accurate final models when many features contribute to the output.
