Category: Deep Learning

MakeItTalk: Speaker-Aware Talking Head Animation

- Neelam Tyagi

- May 17, 2020

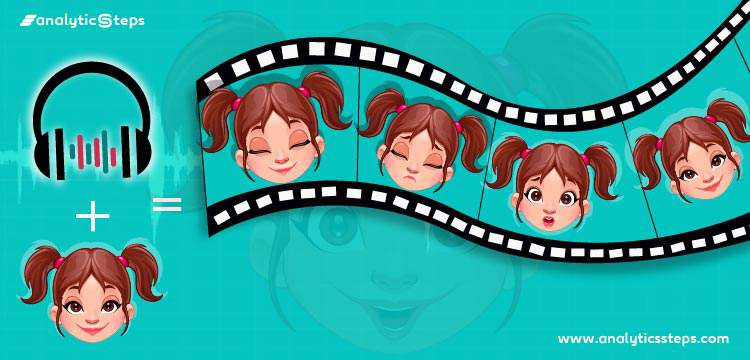

This is really interesting: given only a single audio clip and a single facial image as input, an expressive talking head can be generated as output. A new method built on a deep learning architecture makes this possible. Yang Zhou, a Ph.D. student, and his team developed this method and named it “MakeItTalk”.

In this blog, you will get a preliminary overview of:

- What is a Deep Learning Architecture?
- What actually is MakeItTalk?
- Which method is used?
- Conclusion

Introduction

Expressive animated talking heads are a crucial technology in fields such as filmmaking, video streaming (Read our blog on data handling in Netflix), computer games, and virtual avatars.

However, despite many technological achievements, creating realistic facial animation remains a challenge in computer graphics.

First, let’s look at the challenges in producing talking heads that have motivated research into different methods (models) for generating facial expressions:

- Synchronizing facial motion with speech is hard to achieve manually, because facial dynamics lie on a high-dimensional manifold, which makes it nontrivial to find a mapping from audio or speech to facial motion.

- Different speakers show different talking patterns that express their personalities. Controlling lip synchronization and facial animation alone is not enough to make a talking head look authentic.

An example of a speaker-aware talking head

The whole facial expression depends on the correlations among all facial components, and head pose also plays a crucial role.

To address these challenges, a new method with a deep architecture, “MakeItTalk”, was proposed.

What is a Deep Learning Architecture?

The mother art is architecture. Without an architecture of our own, we have no soul of our own civilization. -Frank Lloyd Wright

Deep learning is a widely used family of machine learning techniques that has achieved great success in applications such as object detection in images, pattern recognition, anomaly detection, and natural language processing.

Deep learning architectures grew out of multilayer neural networks, combined with distinctive design and training approaches that make them competitive.

Deep learning architectures are built by composing multiple linear and non-linear transformations of the data, with the aim of producing more abstract and ultimately more useful representations.
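To make “composing linear and non-linear transformations” concrete, here is a minimal, framework-free sketch of a two-layer network’s forward pass in plain Python. The weights are made up purely for illustration; real architectures learn them from data and are usually built with libraries such as PyTorch or TensorFlow.

```python
def linear(weights, bias, x):
    """Affine transformation: y[i] = sum_j weights[i][j] * x[j] + bias[i]."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Element-wise non-linearity: negative values become 0."""
    return [max(0.0, v) for v in x]


def forward(layers, x):
    """Compose the layers: linear map, then ReLU, repeated.

    `layers` is a list of (weights, bias) pairs. ReLU is applied after every
    layer except the last, a common choice when the output is a regression value.
    """
    for i, (weights, bias) in enumerate(layers):
        x = linear(weights, bias, x)
        if i < len(layers) - 1:
            x = relu(x)
    return x


# Hypothetical toy weights: 2 inputs -> 3 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0]),
    ([[1.0, 1.0, 1.0]], [0.0]),
]
print(forward(layers, [2.0, 1.0]))  # prints [2.5]
```

Without the `relu` step, the two linear layers would collapse into a single linear map, which is why the non-linear transformations are what let deeper stacks learn richer representations.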

These methods have recently become well known for performing exceptionally well on various computer vision and pattern recognition problems.

Deep learning architectures have also transformed big data analytics, helped along by the large-scale deployment of sensor networks and improved communication.

The following table lists popular deep learning architectures along with their applications:

| S.No | Name of Deep Architecture | Applications |
|---|---|---|
| 1 | RNN (Recurrent Neural Network) | Speech recognition, Handwriting recognition |
| 2 | CNN (Convolutional Neural Network) | Image recognition, Video analysis, Natural Language processing |
| 3 | DSN (Deep Stacking Network) | Continuous speech recognition, Information retrieval |
| 4 | LSTM/GRU networks | Handwriting recognition, Speech recognition, Gesture recognition, Natural Language text compression, Image captioning |
| 5 | DBN (Deep Belief Network) | Image recognition, Information retrieval, Failure Prediction, Natural Language understanding |

What Actually is MakeItTalk?

According to the published research paper, Yang Zhou reported that at test time the method can handle new audio clips and images that were not seen during training of the model.

An illustration of Yang Zhou’s experiment

In summary, MakeItTalk is:

- A new deep learning-based architecture that predicts facial landmarks (jaw, head pose, eyebrows, nose, and lips) from audio signals alone.

- A model that generates animation, including facial expressions and head movements, based on both the disentangled speech content and the speaker’s identity, that is, how the speaker talks and how the speaker’s image looks.
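Since the model works with facial landmarks grouped into parts such as the jaw, eyebrows, nose, and lips, here is a small sketch of how one frame of landmarks might be organized. It assumes the widely used 68-point landmark convention (iBUG 300-W); the article itself does not state which convention is used, and the dummy coordinates are hypothetical.

```python
# Index ranges (0-based, end-exclusive) of the common 68-point facial
# landmark convention. Assumption: used here only for illustration.
# Left/right naming conventions vary between tools.
LANDMARK_GROUPS = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),  # outer lip 48-59, inner lip 60-67
}


def split_landmarks(points):
    """Split one frame of 68 (x, y) points into named facial parts."""
    if len(points) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(points)}")
    return {name: [points[i] for i in idx] for name, idx in LANDMARK_GROUPS.items()}


# Hypothetical landmarks for a single frame: dummy (i, i) coordinates.
frame = [(float(i), float(i)) for i in range(68)]
parts = split_landmarks(frame)
counts = {name: len(pts) for name, pts in parts.items()}
print(counts)
```

A speech-driven model would typically predict a sequence of such frames, one per video frame, with the mouth group carrying most of the lip-sync motion.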

Essentially, the key idea of this approach is to disentangle the content and the speaker identity in the input audio signal, and then drive the animation from the resulting disentangled representations.

The content is used for robust synchronization of the lips and nearby facial regions, while the speaker information is used to capture the remaining facial expressions and head movements that are essential for generating expressive talking-head animations.
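The toy sketch below illustrates the idea of driving animation from disentangled representations. It assumes, for illustration only, that each output frame is the portrait’s static landmarks plus two displacements: one computed from the speaker-agnostic content alone, and one computed from content plus a speaker embedding. The two branch functions are hypothetical hand-written stand-ins for trained networks, using just two landmarks (upper and lower lip); this is not MakeItTalk’s actual code.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Frame = List[Point]


def animate(
    static_landmarks: Frame,
    content_frames: List[List[float]],
    speaker_embedding: List[float],
    content_branch: Callable[[List[float]], Frame],
    speaker_branch: Callable[[List[float], List[float]], Frame],
) -> List[Frame]:
    """Return one landmark frame per content frame.

    frame = static landmarks
            + speaker-agnostic displacement (content only)
            + speaker-aware displacement (content + speaker identity)
    """
    frames = []
    for c in content_frames:
        d_content = content_branch(c)
        d_speaker = speaker_branch(c, speaker_embedding)
        frames.append([
            (x + dcx + dsx, y + dcy + dsy)
            for (x, y), (dcx, dcy), (dsx, dsy) in zip(static_landmarks, d_content, d_speaker)
        ])
    return frames


def toy_content_branch(c):
    # Hypothetical: the lower lip drops by a "mouth openness" feature c[0],
    # the same for every speaker.
    return [(0.0, 0.0), (0.0, c[0])]


def toy_speaker_branch(c, s):
    # Hypothetical: s[0] scales how wide this speaker opens the mouth,
    # s[1] is a horizontal head offset applied to every landmark.
    return [(s[1], 0.0), (s[1], c[0] * (s[0] - 1.0))]


static = [(0.0, 0.0), (0.0, 1.0)]  # upper lip, lower lip
content = [[0.0], [2.0]]           # closed mouth, then an open mouth ("Hi")
frames_a = animate(static, content, [1.0, 0.0], toy_content_branch, toy_speaker_branch)
frames_b = animate(static, content, [1.5, 0.5], toy_content_branch, toy_speaker_branch)
print(frames_a)
print(frames_b)
```

Both hypothetical speakers open their mouths on the same content, but speaker B opens wider and shifts the head, which mirrors the split between speaker-agnostic content and speaker-specific style.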

This is the official video of “MakeItTalk”, which demonstrates speaker-aware talking-head animation with a variety of output expressions.

The video illustrates how, given a new (unseen) audio signal and a single unseen portrait image as input, the model generates speaker-aware talking-head animations. The model can animate both photorealistic human face images and non-photorealistic cartoon images.

Which Method is Used?

The diagram below shows the complete pipeline used to generate expressive talking heads. The approach works as follows:

- Given an audio clip and a single facial image, the model produces a speaker-aware talking-head animation synchronized with the audio.

- During training, an off-the-shelf face landmark detector preprocesses the input videos to extract facial landmarks. A baseline model that animates the speech content can then be trained directly from the input audio and the extracted landmarks.

- To obtain high-fidelity motion, the landmarks are predicted from disentangled content and speaker embeddings of the input audio signal. For this, a voice conversion neural network is used to separate the speech content from the speaker identity information.

Pipeline of MakeItTalk, Pic credit

- The content is speaker-agnostic and captures the general motion of the lips and nearby regions, while the speaker identity determines the specifics of that motion and the rest of the talking-head motion. For example, no matter who says “Hi”, the lips are likely to open, which is speaker-agnostic and dictated by the content.

- However, the exact shape and size of the mouth opening, along with the movements of the eyes, nose, and head, depend on who says the word, i.e., the speaker’s identity. Based on the content and speaker identity information, the deep model outputs a sequence of predicted landmarks for the given audio.

- Finally, to produce the output images, two landmark-to-image synthesis algorithms are used: (a) for non-photorealistic images such as canvas art or vector art, a simple image warping method based on Delaunay triangulation is applied; and (b) for photorealistic images, an image-to-image translation network (similar to pix2pix) is trained to animate the given natural human face according to the predicted landmarks. (Reference blog: What is Deepfake Technology and how it may be harmful?)
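To show what “image warping based on Delaunay triangulation” involves, here is a minimal sketch of the per-triangle step of a generic piecewise-affine warp. It assumes the landmarks have already been triangulated (for example with `scipy.spatial.Delaunay`, whose `simplices` attribute lists the vertex indices of each triangle) and that the same triangle indices are reused for the original and the animated landmarks. It is an illustration of the general technique, not MakeItTalk’s implementation, and the triangle coordinates are hypothetical.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]


def barycentric(p: Point, tri: Triangle) -> Optional[Tuple[float, float, float]]:
    """Barycentric coordinates of p with respect to tri, or None if tri is degenerate."""
    (x1, y1), (x2, y2), (x3, y3) = tri
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        return None
    l1 = ((y2 - y3) * (p[0] - x3) + (x3 - x2) * (p[1] - y3)) / det
    l2 = ((y3 - y1) * (p[0] - x3) + (x1 - x3) * (p[1] - y3)) / det
    return l1, l2, 1.0 - l1 - l2


def warp_point(p: Point, dst_tri: Triangle, src_tri: Triangle) -> Optional[Point]:
    """Map a pixel p of the animated (destination) triangle back into the source image.

    This is backward mapping: for every output pixel we find where to sample
    the original picture. Returns None if p lies outside dst_tri.
    """
    bary = barycentric(p, dst_tri)
    if bary is None or min(bary) < -1e-9:
        return None
    l1, l2, l3 = bary
    (sx1, sy1), (sx2, sy2), (sx3, sy3) = src_tri
    return (l1 * sx1 + l2 * sx2 + l3 * sx3, l1 * sy1 + l2 * sy2 + l3 * sy3)


# Hypothetical triangle near the chin: the third vertex moves down as the mouth opens.
source_triangle = ((0.0, 0.0), (10.0, 0.0), (0.0, 10.0))
animated_triangle = ((0.0, 0.0), (10.0, 0.0), (0.0, 12.0))
print(warp_point((0.0, 6.0), animated_triangle, source_triangle))  # prints (0.0, 5.0)
```

A full warp repeats this for every pixel inside every triangle and copies (or interpolates) the source color, so the artwork stretches smoothly with the predicted landmarks.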

Combining all the generated image frames with the input audio produces the final talking-head animation.
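For the frames and the audio to stay in sync, the number of generated frames has to match the audio’s duration. The sketch below shows that bookkeeping, assuming a hypothetical 16 kHz audio sample rate and 25 frames per second; the article does not state the rates MakeItTalk uses.

```python
def frame_audio_spans(num_samples: int, sample_rate: int, fps: float):
    """Return (num_frames, spans), where spans[i] = (start, end) are the audio
    sample indices covered by video frame i."""
    duration = num_samples / sample_rate  # seconds of audio
    num_frames = int(round(duration * fps))
    spans = []
    for i in range(num_frames):
        start = int(round(i * sample_rate / fps))
        end = min(num_samples, int(round((i + 1) * sample_rate / fps)))
        spans.append((start, end))
    return num_frames, spans


# Hypothetical clip: 2 seconds of 16 kHz audio rendered at 25 fps.
num_frames, spans = frame_audio_spans(32_000, 16_000, 25)
print(num_frames, spans[0], spans[-1])  # prints 50 (0, 640) (31360, 32000)
```

The actual muxing of the image frames and the audio track into a video file would typically be done with a tool such as FFmpeg.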

Conclusion

There are numerous architectures and algorithms widely used in deep learning, and these classes of algorithms and topologies can be applied to a broad spectrum of problems. Notably, LSTM and CNN are two of the most established methods and also two of the most widely used in various applications; both are used in “MakeItTalk”.

Now, at the end of this blog, you have learned about a fascinating deep learning-based method that creates speaker-aware talking-head animations from an audio clip and a single portrait image. The approach can handle new audio clips and new images that were not seen during training of the model.
