What are Skip Connections in Neural Networks?

- Bhumika Dutta

- Sep 20, 2021

Introduction

Before starting with anything related to neural networks, we need to be clear about what deep learning is. Deep learning is a field of study within machine learning that deals with algorithms inspired by the structure and functioning of the brain. These are called artificial neural networks, and they mimic the way biological neurons send signals to each other.

Deep learning has produced many architectural techniques that make very deep networks practical to train. One such technique is the “skip connection”. To understand how a skip connection works, however, one first needs to understand backpropagation.

- Backpropagation is the algorithm that computes, through partial derivatives, how altering the weights (parameters) in a network affects the loss function. An optimizer then uses these gradients to adjust the weights and minimize the loss.

- The chain rule is used to express the gradient of a loss function with respect to network parameters when each layer's output is a function of the previous layer's output. Backpropagation multiplies these partial derivatives together to pass the gradient back to the previous layer.


In general, multiplying by factors with an absolute value smaller than one is considered desirable because it gives some sense of training stability, although no formal mathematical theory governs this. The drawback is that, as we travel backward through the network, the product of many such factors makes the gradient smaller and smaller. This is known as the vanishing gradient problem, and it is where skip connections come to the rescue.
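The shrinking effect can be illustrated with a few lines of Python. This is only a sketch: the function name `gradient_through_chain` is ours, and the local derivative of 0.5 per layer is a made-up value chosen for illustration, not a measurement from a real network.

```python
from math import prod


def gradient_through_chain(local_derivatives):
    """Multiply the per-layer local derivatives, as the chain rule does."""
    return prod(local_derivatives)


# Hypothetical setting: every layer contributes a local derivative of 0.5.
for depth in (5, 20, 50):
    factors = [0.5] * depth
    print(depth, gradient_through_chain(factors))
```

With these assumed factors, the gradient that reaches the first layer of the 50-layer chain is smaller than 1e-15, so the earliest layers would barely be updated.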

In this article, we are going to analyze and explore the following topics:

- What are skip connections?

- What are the different types of skip connections?

Let us proceed towards the first topic and understand what skip connections are.

Skip connections

As the name suggests, skip connections in deep architectures bypass some of the neural network layers and feed the output of one layer as input to later layers, not just the next one. They are a standard module and provide an alternative path for the gradient during backpropagation.

Skip connections were originally created to tackle different difficulties in different architectures and were introduced even before residual networks. In residual networks (ResNets), skip connections were used to solve the degradation problem, and in dense networks (DenseNets), they ensure feature reusability.

According to an example explained by Analytics Vidhya, Highway Networks (Srivastava et al.) used skip connections with gates that regulate and learn the flow of information to deeper layers. This notion is comparable to the LSTM gating mechanism. Although ResNets can be viewed as a special case of Highway Networks, the performance of Highway Networks falls short when compared to ResNets.

As mentioned earlier, skip connections solve the degradation problem. But what is the degradation problem exactly? When developing deep neural nets, the model's performance degrades as the architecture's depth increases. This is referred to as the degradation problem. Overfitting might seem the obvious cause, but it does not explain the effect, because the model with more layers has a higher training error, not just a higher test error, than the shallower model. Other possible explanations are vanishing and/or exploding gradients.

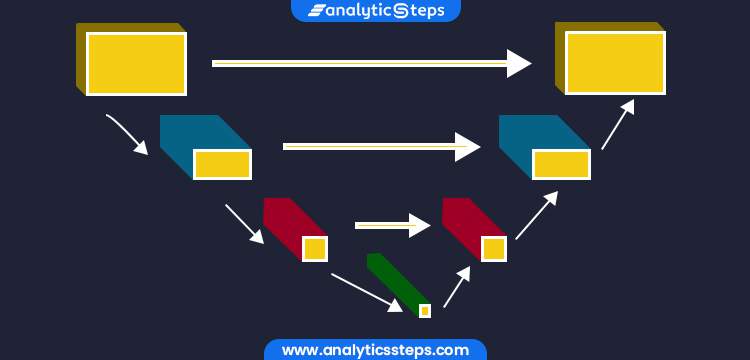

Let us consider a shallow neural network that has been trained on a collection of data and a deeper network in which the beginning layers have the same weight matrices as the shallow network, as depicted by the blue colored layers in the diagram below, but with some more layers added (green colored layers). The weight matrices of the additional layers are configured to be identity matrices (identity mappings).

Diagram showing shallow and deep network (source)

Because we are reusing the shallow model's weights in the deeper network and the extra layers are identity mappings, the deeper network should not yield any more training error than its shallow equivalent. However, experiments show that the deeper network has a higher training error than the shallow one. This points to the deeper layers' failure to learn even identity mappings. Skip connections are therefore used to make these deep neural networks trainable.
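To see why a skip connection makes the identity mapping easy to represent, consider the following minimal sketch. The helper names (`linear`, `relu`, `plain_layer`, `residual_layer`) and the all-zero weights are illustrative assumptions, not code from any particular library or paper. When a layer's weights are zero, a plain layer wipes out its input, whereas a layer with a skip connection passes the input through unchanged.

```python
def linear(weights, x):
    """Matrix-vector product for a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, vi) for vi in v]


def plain_layer(weights, x):
    """Ordinary layer: the output is only the transformed input."""
    return relu(linear(weights, x))


def residual_layer(weights, x):
    """Layer with a skip connection: the input is added to the transformed input."""
    return [xi + fi for xi, fi in zip(x, relu(linear(weights, x)))]


x = [1.5, -2.0, 0.25]                        # stand-in for the shallow network's output
zero_weights = [[0.0] * 3 for _ in range(3)]

out_plain, out_residual = x, x
for _ in range(10):                          # ten extra layers stacked on top
    out_plain = plain_layer(zero_weights, out_plain)
    out_residual = residual_layer(zero_weights, out_residual)

print(out_plain)
print(out_residual)
```

In this toy setting the residual stack reproduces `x` exactly, so the deeper network is no worse than the shallow one as long as the extra layers drive their weights toward zero. The plain stack would have to learn an exact identity through its nonlinear layers instead.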


Types of Skip Connections

Generally, skip connections can merge features from non-adjacent layers in two ways: addition and concatenation. Let us learn about the two types of skip connections that use these two ways.

- Residual Networks (ResNets): skip connections using addition

He et al. presented Residual Networks in 2015 to tackle the image classification challenge. In ResNets, the data from earlier layers is passed to deeper layers through element-wise addition, so the gradient is also backpropagated through an addition.

Because the output of the preceding layer is simply added to the output of a later layer, this operation requires no extra parameters. The main mechanism of ResNets is to stack residual blocks together and use an identity function to preserve the gradient. A residual block with a skip connection looks like the diagram below:

A residual block with skip connections (source)

We can represent the residual block and calculate the gradient of the loss function with respect to the block's input as follows:

The formula of the loss function (source)
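The original figure is not reproduced here. A standard way to write the same relationship is given below, under our own choice of symbols: $x$ is the block input, $F(x)$ is the residual function computed by the bypassed layers, $H(x)$ is the block output, and $L$ is the loss.

```latex
H(x) = F(x) + x
\qquad\Longrightarrow\qquad
\frac{\partial L}{\partial x}
  = \frac{\partial L}{\partial H}\,\frac{\partial H}{\partial x}
  = \frac{\partial L}{\partial H}\left(\frac{\partial F}{\partial x} + 1\right)
  = \frac{\partial L}{\partial H}\,\frac{\partial F}{\partial x}
  + \frac{\partial L}{\partial H}
```

The second term, $\partial L / \partial H$, reaches the input without being multiplied by the layer's own derivative. So even when $\partial F / \partial x$ is very small, part of the gradient still flows back through the identity path.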

Due to the deeper layer representation of ResNets, the pre-trained weights of the network can be used to solve several tasks. It is not only restricted to image classification, but it can also address a broad variety of image segmentation, keypoint identification, and object recognition problems. As a result, ResNet is regarded as one of the most important designs in the deep learning community.


- Densely Connected Convolutional Networks (DenseNets): skip connections using concatenation

Low-level information is shared between the input and output, and it would be preferable to transfer this information directly across the network. Concatenation of earlier feature maps is another method for achieving skip connections.

DenseNet is the best-known deep learning architecture built on this idea. Huang et al. proposed DenseNets in 2017. The primary distinction between ResNets and DenseNets is that DenseNets concatenate a layer's output feature maps with the input to the next layer rather than summing them.

Let us look at the 5-layer dense block depicting feature reusability using concatenation:

5-layer dense block (source)

The aim of concatenation is to make the features learned in earlier layers available to deeper layers as well. This is referred to as feature reusability. As there is no need to learn redundant feature maps, DenseNets can learn a mapping with fewer parameters than a standard convolutional neural network. The concatenation leads to a large number of feature channels in the last layers of the network and to extreme feature reusability.
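The growth in feature channels can be sketched in plain Python. Everything here is a toy assumption: feature maps are reduced to single numbers, `make_toy_layer` is a hypothetical stand-in for a convolutional layer, and `growth_rate` (the number of new features each layer adds) is set to 4 for illustration.

```python
def dense_block(block_input, layers):
    """Run a dense block: every layer sees the concatenation of all earlier outputs."""
    features = list(block_input)             # running concatenation of feature channels
    for layer in layers:
        new_features = layer(features)
        features = features + new_features   # concatenate the channels, do not sum them
    return features


def make_toy_layer(growth_rate):
    """Hypothetical layer: emits `growth_rate` channels, each the mean of its inputs."""
    def layer(features):
        mean = sum(features) / len(features)
        return [mean] * growth_rate
    return layer


growth_rate = 4
block_input = [1.0, 2.0, 3.0]                # 3 input channels
layers = [make_toy_layer(growth_rate) for _ in range(4)]
output = dense_block(block_input, layers)

print(len(output))                           # 3 + 4 * 4 = 19 channels
```

The original input channels are still present, untouched, at the start of `output`. That is the feature reuse described above, and the channel count grows linearly with the number of layers in the block.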

Other than the two types of skip connections mentioned above, there are a few other skip connections worth mentioning. Before introducing skip connections in any deep learning model, we have to be careful about dimensionality: for concatenation, every dimension apart from the chosen channel dimension must be the same, and for addition the channel dimension must match as well. There are two kinds of setups where additive skip connections are used: short skip connections and long skip connections.
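The dimensionality constraint can be expressed as a small shape check. This is a sketch with hypothetical helper names (`can_add`, `can_concat`, `concat_shape`). It assumes shapes are written as `(channels, height, width)` tuples, that element-wise addition is done without broadcasting, and that concatenation happens along the channel axis.

```python
def can_add(shape_a, shape_b):
    """Element-wise addition needs identical shapes, channels included."""
    return tuple(shape_a) == tuple(shape_b)


def can_concat(shape_a, shape_b, channel_axis=0):
    """Concatenation needs equal sizes on every axis except the channel axis."""
    if len(shape_a) != len(shape_b):
        return False
    return all(
        a == b
        for axis, (a, b) in enumerate(zip(shape_a, shape_b))
        if axis != channel_axis
    )


def concat_shape(shape_a, shape_b, channel_axis=0):
    """Shape of the concatenated tensor: the channel sizes add up."""
    if not can_concat(shape_a, shape_b, channel_axis):
        raise ValueError(f"cannot concatenate {shape_a} and {shape_b}")
    result = list(shape_a)
    result[channel_axis] += shape_b[channel_axis]
    return tuple(result)


print(can_add((64, 32, 32), (128, 32, 32)))
print(can_concat((64, 32, 32), (128, 32, 32)))
print(concat_shape((64, 32, 32), (128, 32, 32)))
```

Two feature maps with 64 and 128 channels at the same spatial resolution can be concatenated but not added. If the spatial sizes differ, neither operation is possible without resizing or projecting one of the inputs first.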

Short skip connections are often used together with consecutive convolutional layers that do not change the input dimension, as in ResNet, whereas long skip connections are typically seen in encoder-decoder architectures. It is well understood that global information (image shape and other statistics) resolves “what”, whereas local information (small details in an image patch) resolves “where”.

Long skip connections are common in symmetrical designs, where the spatial dimensionality is reduced in the encoder and subsequently increased in the decoder. Transposed convolutional layers in the decoder can be used to increase the dimensionality of a feature map. The transposed convolution creates the same connectivity as the regular convolution operation, but in the opposite direction.
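How the spatial size shrinks in the encoder and grows again in the decoder can be checked with the usual output-size formulas. The sketch below assumes a dilation of 1 and no output padding. The function names and the kernel, stride, and padding values are illustrative choices, not taken from a specific model.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a regular convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def transposed_conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


size = 64
encoder_sizes = [size]
for _ in range(2):                       # encoder: two stride-2 convolutions
    size = conv_output_size(size, kernel=3, stride=2, padding=1)
    encoder_sizes.append(size)

decoder_sizes = [size]
for _ in range(2):                       # decoder: two stride-2 transposed convolutions
    size = transposed_conv_output_size(size, kernel=2, stride=2)
    decoder_sizes.append(size)

print(encoder_sizes)
print(decoder_sizes)
```

With these assumed settings the encoder goes 64, 32, 16 and the decoder mirrors it back to 64. Because the sizes match level by level, encoder features can be joined to decoder features of the same resolution through a long skip connection.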

Now that we understand what long skip connections are, let us learn more about U-Net, an architecture with long skip connections that is used in the biomedical field. Ronneberger et al. proposed U-Net for biomedical image segmentation. It has an encoder and a decoder that are linked by skip connections. The overall architecture resembles the letter “U”, hence the name. It is used for tasks whose output has the same spatial dimensions as the input, such as image segmentation, optical flow estimation, video prediction, and so on.
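The role of the long skip connection can be shown with a deliberately tiny, one-dimensional stand-in for U-Net. This is a toy sketch, not the published architecture. Here `downsample` and `upsample` replace the learned encoder and decoder stages, the input length is assumed to be even, and the skip connection concatenates the encoder feature with the decoder feature at each position.

```python
def downsample(signal):
    """Halve the length by averaging neighbouring pairs (stand-in for an encoder stage)."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]


def upsample(signal):
    """Double the length by repeating each value (stand-in for a decoder stage)."""
    return [value for value in signal for _ in range(2)]


def toy_unet(signal):
    """One-level encoder-decoder whose output carries two channels per position."""
    coarse = downsample(signal)      # encoder path: fine detail is lost here
    decoded = upsample(coarse)       # decoder path: back to the input resolution
    # Long skip connection: concatenate the encoder feature with the decoded one.
    return [[d, s] for d, s in zip(decoded, signal)]


signal = [1.0, 3.0, 2.0, 6.0]
for position in toy_unet(signal):
    print(position)
```

The first channel of each position holds only the coarse, averaged value, while the second channel, delivered by the skip connection, still carries the exact original detail. A real U-Net would apply further convolutions to the concatenated features to produce the final prediction.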


Conclusion

Skip connections are used because they provide an uninterrupted gradient flow from the first to the final layer, thereby addressing the vanishing gradient problem. Concatenative skip connections, which are also commonly used, offer an alternative way to ensure that features of the same dimensionality from earlier layers can be reused.


To recover spatial information lost during downsampling, long skip connections are used to pass features from the encoder path to the decoder path. In deep architectures, short skip connections appear to stabilize gradient updates.

Finally, skip connections allow for feature reuse while also stabilizing training and convergence. Thus in this article, we have learned about skip connections and the types of skip connections available.
