What is LightGBM Algorithm, How to use it?

- Rohit Dwivedi

- Jun 26, 2020

Machine learning is a rapidly growing field, and many different algorithms are in use today. In this post I introduce one of the newer ones, LightGBM. Because it is relatively new, there are not many resources that explain it.

In this blog, I will keep things short and specific, and explain how you can use LightGBM for different machine learning tasks. If you go through the LightGBM documentation, you will find a large number of parameters, and it is easy to get confused about which ones to use. I will try to make that easier for you.

What is LightGBM?

LightGBM is a gradient boosting framework that uses tree-based learning algorithms. It is designed to be computationally efficient and is known for fast training.

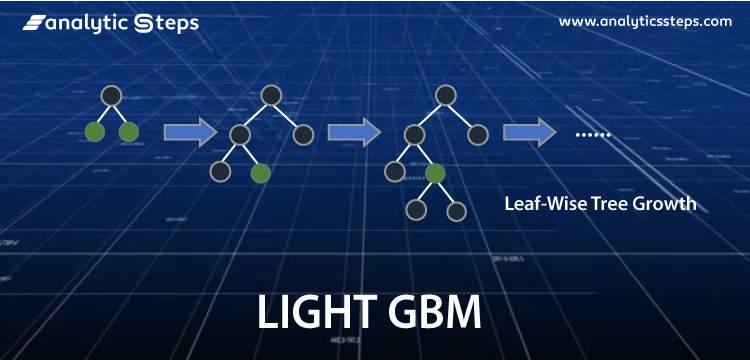

Whereas trees in many other algorithms grow horizontally (level-wise), trees in LightGBM grow vertically (leaf-wise). At each step, LightGBM chooses the leaf with the largest loss reduction (maximum delta loss) to grow. For the same number of leaves, leaf-wise growth can reduce more loss than level-wise growth.
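To make the difference between the two growth strategies concrete, here is a toy sketch in plain Python. It does not use LightGBM at all. It assumes, purely for illustration, that splitting a leaf with loss L always produces children with losses 0.6 × L and 0.1 × L, so the leaf with the largest loss is also the one whose split removes the most loss. The function names are hypothetical.

```python
import heapq

# Toy assumption (not LightGBM behaviour): splitting a leaf with loss L
# yields children with losses 0.6 * L and 0.1 * L, removing 0.3 * L of loss.
LEFT, RIGHT = 0.6, 0.1


def split(loss):
    """Return the losses of the two children of a leaf."""
    return loss * LEFT, loss * RIGHT


def grow_leaf_wise(num_leaves):
    """Best-first growth: always split the leaf with the largest loss."""
    heap = [-1.0]  # max-heap via negated losses; the root has loss 1.0
    while len(heap) < num_leaves:
        worst = -heapq.heappop(heap)
        for child in split(worst):
            heapq.heappush(heap, -child)
    return -sum(heap)  # total remaining loss over all leaves


def grow_level_wise(depth):
    """Level-wise growth: split every leaf on the current level."""
    leaves = [1.0]
    for _ in range(depth):
        leaves = [child for leaf in leaves for child in split(leaf)]
    return sum(leaves)


leaf_wise_loss = grow_leaf_wise(num_leaves=4)
level_wise_loss = grow_level_wise(depth=2)  # depth 2 also gives 4 leaves
print(leaf_wise_loss, level_wise_loss)
```

With four leaves in both cases, the leaf-wise tree ends with a lower total loss in this toy setting, because it spends every split where the most loss remains.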

Why is LightGBM popular?

As data volumes grow day by day, traditional algorithms struggle to deliver results quickly. LightGBM is called “Light” because of its speed: it trains fast, needs less memory, and can handle large amounts of data. It is widely used in hackathons because it focuses on accuracy, and it also supports GPU learning.

When to use LightGBM?

LightGBM is not a good fit for small datasets: it is sensitive and can easily overfit small data. There is no fixed threshold for when to use it, but as a rule of thumb it is suited to data with more than 10,000 rows. It works well on large volumes of data, especially when high accuracy is needed.

What are LightGBM Parameters?

It is important to be familiar with the basic parameters of any algorithm you use. The LightGBM documentation lists more than 100 parameters, but there is no need to study all of them. Let us look at the most important ones.

Control Parameters

- max_depth: Limits the depth of the tree and helps control overfitting. If you feel your model is overfitting, lower max_depth.
- min_data_in_leaf: The minimum number of records a leaf may have. Also used to control overfitting.
- feature_fraction: The fraction of features that is randomly selected in each iteration for building trees. A value of 0.7 means 70% of the features are used.
- bagging_fraction: The fraction of the data that is used in each iteration. Often used to speed up training and avoid overfitting.
- early_stopping_round: Training stops if the metric on the validation data does not show any improvement in the last early_stopping_round rounds. This cuts down on unnecessary iterations.
- lambda: Controls regularization (lambda_l1 and lambda_l2 in LightGBM). Typical values range from 0 to 1.
- min_gain_to_split: The minimum gain required to make a split. Used to control the number of splits in the tree.
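The early stopping rule from the list above can be sketched in a few lines of plain Python. This is a simplified illustration of the idea, not LightGBM's own implementation. It assumes a metric where lower is better, and the function name is hypothetical.

```python
def early_stopping_iteration(val_losses, early_stopping_round):
    """Return (stop_iteration, best_iteration), both 1-based.

    Training stops once the validation loss has not improved for
    `early_stopping_round` consecutive iterations. If that never
    happens, the stop iteration is the last one.
    """
    best_loss = float("inf")
    best_iter = 0
    for i, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_iter = loss, i
        elif i - best_iter >= early_stopping_round:
            return i, best_iter
    return len(val_losses), best_iter


# Hypothetical validation losses, one per boosting iteration.
losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.63, 0.5]
print(early_stopping_iteration(losses, early_stopping_round=3))
```

In this example the best loss is reached at iteration 3, and training stops at iteration 6 after three rounds without improvement. A larger early_stopping_round is more patient and would let training reach the later improvement at iteration 7.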

Core Parameters

- task: The task to perform on the data. It can be either train or predict.
- application: Specifies whether to do regression or classification. The default is regression.
  - binary: Used for binary classification.
  - multiclass: Used for multiclass classification problems.
  - regression: Used for regression.
- boosting: Specifies the type of boosting algorithm.
  - gbdt: Traditional gradient boosting decision tree. This is the default.
  - rf: Random forest.
  - goss: Gradient-based One-Side Sampling.
- num_boost_round: The number of boosting iterations.
- learning_rate: Controls how strongly each tree's output changes the current estimate. Typical values are 0.1, 0.001, and 0.003.
- num_leaves: The maximum number of leaves in one tree. The default value is 31.
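As a minimal sketch of how the core parameters above fit together in the Python package, the example below builds a parameter dictionary and passes it to `lgb.train`. The helper names `make_params` and `train_sketch` and the synthetic data are assumptions made for illustration. `train_sketch` needs `lightgbm` and `numpy` installed, so it imports them only when called.

```python
def make_params():
    """Core parameters for a binary classification task."""
    return {
        "objective": "binary",       # "application" is an alias of "objective"
        "boosting": "gbdt",          # default boosting type
        "learning_rate": 0.1,
        "num_leaves": 31,            # default value
        "metric": "binary_logloss",
        "verbosity": -1,             # keep training output quiet
    }


def train_sketch(num_boost_round=50):
    """Train on synthetic data and return predicted probabilities."""
    # Imported here so the parameter dictionary can be inspected
    # even where lightgbm is not installed.
    import numpy as np
    import lightgbm as lgb

    rng = np.random.default_rng(0)
    X = rng.normal(size=(2000, 5))            # made-up features
    y = (X[:, 0] + X[:, 1] > 0).astype(int)   # made-up binary target

    train_set = lgb.Dataset(X[:1500], label=y[:1500])
    valid_set = lgb.Dataset(X[1500:], label=y[1500:], reference=train_set)

    booster = lgb.train(
        make_params(),
        train_set,
        num_boost_round=num_boost_round,
        valid_sets=[valid_set],
    )
    return booster.predict(X[1500:])


params = make_params()
print(params)
```

Calling `train_sketch()` returns one probability per validation row. For a regression task you would change `objective` to `regression` and pick a regression metric.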

Metric Parameter

The metric parameter specifies the loss that is evaluated while building the model. Some common choices for regression and classification are listed below.

- mae: Mean absolute error.
- mse: Mean squared error.
- binary_logloss: Log loss for binary classification.
- multi_logloss: Log loss for multiclass classification.
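To see what these metrics measure, here are their standard textbook definitions written in plain Python. These are reference formulas only, not LightGBM's internal code, and the function names simply mirror the metric names.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_logloss(y_true, p_pred, eps=1e-15):
    """Log loss for labels in {0, 1} and predicted P(label = 1)."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)


def multi_logloss(y_true, prob_rows, eps=1e-15):
    """Log loss for integer class labels and per-class probabilities."""
    total = 0.0
    for label, probs in zip(y_true, prob_rows):
        total -= math.log(max(probs[label], eps))
    return total / len(y_true)


print(mae([1, 2, 3], [1, 2, 5]), mse([1, 2, 3], [1, 2, 5]))
print(binary_logloss([1, 0], [0.5, 0.5]))
```

Lower values are better for all four metrics.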

Parameter Tuning

Parameter tuning is an important step that data scientists use to achieve good accuracy, get results quickly, and deal with overfitting. Let us quickly look at some of the tuning you can do for better results.

- num_leaves: This parameter is responsible for the complexity of the model. Its value should ideally be less than or equal to 2^(max_depth). A larger value can result in overfitting.
- min_data_in_leaf: Assigning a very large value to this parameter can result in underfitting. A value in the hundreds or thousands is sufficient for a large dataset.
- max_depth: Use max_depth to limit the depth of the tree.
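The relationship between num_leaves and max_depth comes from simple counting: a binary tree of depth max_depth has at most 2^(max_depth) leaves, so a larger num_leaves cannot be reached under that depth limit. A small, hypothetical helper (not part of LightGBM) can check a configuration against this rule of thumb:

```python
def num_leaves_fits_depth(num_leaves, max_depth):
    """Return True if num_leaves <= 2 ** max_depth.

    LightGBM treats max_depth <= 0 as "no limit", so in that
    case any num_leaves value is accepted here.
    """
    if max_depth <= 0:
        return True
    return num_leaves <= 2 ** max_depth


print(num_leaves_fits_depth(31, 5))   # 31 <= 32
print(num_leaves_fits_depth(31, 4))   # 31 > 16
```

In practice, values well below 2^(max_depth) are often chosen, since a leaf-wise tree with the same number of leaves as a level-wise tree tends to be deeper and more prone to overfitting.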

If you need to speed things up:

- Assign small values to max_bin.
- Use bagging by setting bagging_fraction and bagging_freq.
- Use feature sub-sampling by setting feature_fraction.
- Use save_binary to speed up data loading in future runs.

If you want good accuracy:

- Use a small learning_rate with a large num_iterations.
- Assign large values to max_bin.
- Assign a large value to num_leaves.
- Use more training data.
- Use categorical features directly.

If you want to deal with overfitting:

- Assign small values to max_bin and num_leaves.
- Use a large volume of training data.
- Use max_depth to avoid deep trees.
- Use bagging by setting bagging_fraction and bagging_freq.
- Use feature sub-sampling by setting feature_fraction.
- Use lambda_l1, lambda_l2, and min_gain_to_split for regularization.
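The three tuning goals above can be written down as parameter presets that are layered on top of a base configuration. The sketch below is only an illustration: the specific numbers are made-up starting points, not recommended values, and `PRESETS` and `with_preset` are hypothetical names.

```python
# Base configuration; 0.1, 31 and 255 are the LightGBM defaults for
# learning_rate, num_leaves and max_bin.
BASE = {
    "objective": "regression",
    "learning_rate": 0.1,
    "num_leaves": 31,
    "max_bin": 255,
}

# Illustrative values only; tune them on your own data.
PRESETS = {
    "speed": {
        "max_bin": 63,              # fewer histogram bins
        "bagging_fraction": 0.8,    # row sub-sampling
        "bagging_freq": 5,          # re-sample every 5 iterations
        "feature_fraction": 0.8,    # feature sub-sampling
    },
    "accuracy": {
        "num_iterations": 1000,     # many iterations ...
        "learning_rate": 0.01,      # ... with a small learning rate
        "max_bin": 511,
        "num_leaves": 63,
    },
    "less_overfitting": {
        "max_bin": 63,
        "num_leaves": 15,
        "max_depth": 6,
        "bagging_fraction": 0.8,
        "bagging_freq": 5,
        "feature_fraction": 0.8,
        "lambda_l1": 0.1,
        "lambda_l2": 0.1,
        "min_gain_to_split": 0.01,
    },
}


def with_preset(base, goal):
    """Return a copy of `base` with the preset for `goal` applied."""
    params = dict(base)
    params.update(PRESETS[goal])
    return params


print(with_preset(BASE, "accuracy"))
```

Notice that the goals pull in opposite directions: the accuracy preset raises max_bin and num_leaves, while the speed and overfitting presets lower them, so a real configuration is usually a compromise.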

Conclusion

LightGBM is considered a very fast algorithm and is widely used in machine learning when fast, high-accuracy results are needed. The LightGBM documentation lists more than 100 parameters.

In this blog, I have tried to give you a basic idea of the algorithm and the different parameters it uses. At the end, I discussed parameter tuning to speed up training, achieve good accuracy, and avoid overfitting.
