
Word Embedding In NLP with Python Code Implementation

- Tanesh Balodi

- Dec 08, 2020

- Updated on: Dec 08, 2020

Introduction to Word Embeddings

When we talk about natural language processing (NLP), we mean the ability of a machine learning model to understand the meaning of text on its own and to perform human-like tasks such as predicting the next word or sentence, writing an essay on a given topic, or identifying the sentiment behind a word or a paragraph.

Some familiar techniques, such as Bag of Words, work well on small-scale data, but they struggle to capture the meaning of a sentence or paragraph. Their power is also limited because they ignore the order of the words.
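A minimal sketch of the word-order problem, using a hypothetical helper `bag_of_words` that counts words against a fixed vocabulary: two sentences with opposite meanings receive identical vectors.

```python
from collections import Counter

def bag_of_words(sentence, vocabulary):
    # Count how often each vocabulary word occurs; word positions are discarded.
    counts = Counter(sentence.lower().split())
    return [counts[word] for word in vocabulary]

vocabulary = ["bites", "dog", "man"]
v1 = bag_of_words("Dog bites man", vocabulary)
v2 = bag_of_words("Man bites dog", vocabulary)
print(v1, v2, v1 == v2)  # [1, 1, 1] [1, 1, 1] True
```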

We needed a more reliable approach, and word embeddings solve most of these problems. In this article, we discuss how word embeddings work and implement them in Python.

Why Word Embeddings?

What makes word embeddings different from, and more powerful than, Bag of Words and similar techniques is that they address those techniques' limitations. A few points that make word embeddings better than the alternatives:

- They give a better understanding of words and sentences, also known as linguistic analysis, than other NLP techniques.

- They reduce the dimensionality of the data: each word is represented by a short vector (300 values in the implementation below) instead of a vector as long as the whole vocabulary.

- They take less execution time: because the vectors are smaller, a model needs far fewer weights and trains faster.

- They use dense vectors instead of sparse matrices, which makes them more efficient to compute with.
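The dimensionality and sparsity points can be illustrated with NumPy. This sketch assumes the vocabulary size (8756) and embedding size (300) from the implementation later in this article; the word indices and the random embedding matrix are placeholders, since real embeddings are learned. It shows that a one-hot vector is almost entirely zeros and that two different one-hot vectors always have a dot product of 0, so they carry no notion of similarity.

```python
import numpy as np

vocab_size = 8756    # vocabulary size from the implementation below
embedding_dim = 300  # embedding size from the implementation below

def one_hot(index, size):
    # Sparse representation: a single 1 at the word's index, zeros elsewhere.
    vec = np.zeros(size, dtype=np.float32)
    vec[index] = 1.0
    return vec

# Placeholder indices standing in for two words such as "cat" and "dog".
cat, dog = one_hot(10, vocab_size), one_hot(20, vocab_size)
print(np.count_nonzero(cat), cat.size)  # 1 8756
print(np.dot(cat, dog))                 # 0.0: distinct one-hot vectors are orthogonal

# Dense representation: one short row per word (random here, learned in practice).
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(vocab_size, embedding_dim)).astype(np.float32)
print(embeddings[10].shape)  # (300,)
```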

Working of Word Embeddings

The goal of any NLP technique is for the model to extract meaning from text and use it to perform tasks, which is also called linguistic understanding. This is where embeddings come into play.

Consider the two words “cricket” and “football”. Both are more closely related to “field” and “game” than to “animals” and “space”. For a model to capture relationships like these, each word needs a numeric representation in which related words end up close together.

One way to build embeddings is to assign a vector to each word based on how often words occur in a corpus and how often they occur together (co-occur).

Now, consider the following corpus:

“This is a nice place”

“This place is nice”

“A nice place”

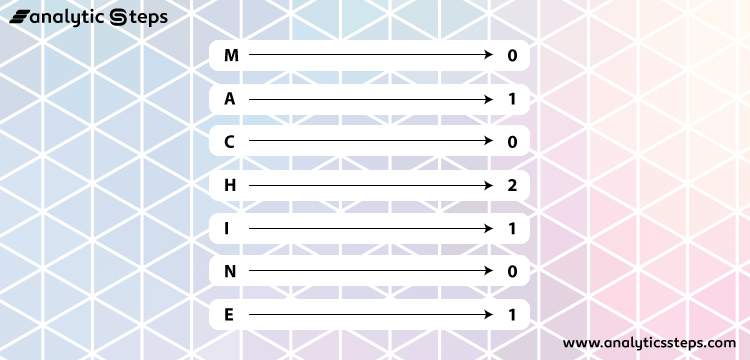

Here, words such as “a” and “nice” appear together, “This” and “is” also occur in the same sentence, and no word is repeated within a sentence. The co-occurrence (vector) representation is therefore:

Correlation Matrix Representation of the corpus
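A small sketch that counts, for every pair of distinct words in the three-sentence corpus, the number of sentences in which both words appear. This is one plausible counting scheme; the exact values in the article's figure may have been computed differently.

```python
from itertools import combinations

import numpy as np

corpus = ["This is a nice place", "This place is nice", "A nice place"]
sentences = [s.lower().split() for s in corpus]
vocab = sorted({w for s in sentences for w in s})
index = {w: i for i, w in enumerate(vocab)}

# counts[i, j] = number of sentences in which words i and j both appear.
counts = np.zeros((len(vocab), len(vocab)), dtype=int)
for words in sentences:
    for w1, w2 in combinations(sorted(set(words)), 2):
        counts[index[w1], index[w2]] += 1
        counts[index[w2], index[w1]] += 1

print(vocab)   # ['a', 'is', 'nice', 'place', 'this']
print(counts)
```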

The initial vectors are based on how often the words appear together in a sentence. We can then take any pair of word vectors and check how close they are in the vector space: if two related words start out far apart, we move their vectors closer to each other, so that the distance reflects their relationship. After repeating this step many times, we arrive at an accurate vector space model. Learning word vectors from global co-occurrence statistics in this way is the idea behind GloVe (Global Vectors for Word Representation).
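To make the GloVe idea concrete, here is a toy sketch of its training objective: for every pair of words (i, j) that co-occur X_ij times, GloVe fits a word vector w_i, a context vector w̃_j and two biases so that w_i · w̃_j + b_i + b̃_j ≈ log X_ij, weighting each pair with f(X_ij) = min((X_ij / 100)^0.75, 1). Assumptions: the sentence-level counts of the three-sentence corpus replace GloVe's window-based counts, plain gradient descent replaces the AdaGrad optimizer used in the GloVe paper, and the sizes (2 dimensions, 500 steps, learning rate 0.1) are arbitrary.

```python
import numpy as np

# Sentence-level co-occurrence counts for the three-sentence corpus
# (a simplification: GloVe itself counts words inside a sliding window).
vocab = ["a", "is", "nice", "place", "this"]
X = np.array([[0, 1, 2, 2, 1],
              [1, 0, 2, 2, 2],
              [2, 2, 0, 3, 2],
              [2, 2, 3, 0, 2],
              [1, 2, 2, 2, 0]], dtype=float)
V = len(vocab)

# GloVe only fits word pairs that actually co-occur.
rows, cols = np.nonzero(X)
log_x = np.log(X[rows, cols])
weight = np.minimum((X[rows, cols] / 100.0) ** 0.75, 1.0)  # f(x) with x_max = 100

dim, lr = 2, 0.1
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(V, dim))      # word vectors
W_ctx = rng.normal(scale=0.1, size=(V, dim))  # context-word vectors
b, b_ctx = np.zeros(V), np.zeros(V)           # biases

def predictions():
    # w_i . w~_j + b_i + b~_j for every co-occurring pair (i, j)
    return (W[rows] * W_ctx[cols]).sum(axis=1) + b[rows] + b_ctx[cols]

def loss():
    return float((weight * (predictions() - log_x) ** 2).sum())

initial_loss = loss()
for _ in range(500):
    g = 2 * weight * (predictions() - log_x)  # d(loss)/d(prediction) for each pair
    grad_W = np.zeros_like(W)
    grad_W_ctx = np.zeros_like(W_ctx)
    np.add.at(grad_W, rows, g[:, None] * W_ctx[cols])
    np.add.at(grad_W_ctx, cols, g[:, None] * W[rows])
    grad_b = np.bincount(rows, weights=g, minlength=V)
    grad_b_ctx = np.bincount(cols, weights=g, minlength=V)
    W -= lr * grad_W
    W_ctx -= lr * grad_W_ctx
    b -= lr * grad_b
    b_ctx -= lr * grad_b_ctx

vectors = W + W_ctx  # the GloVe paper sums both vector sets for the final embeddings
print(initial_loss, loss())
```

The loss falls as the vectors and biases are pulled toward the log co-occurrence counts; on a real corpus the same objective is applied to millions of word pairs.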

Vector Space Representation of different pairs of words

The figure above shows how relationships between words appear in vector space: “dog” and “cat” are more closely related, and therefore closer together, than “no” and “bat”. It is also an example of visualizing word embeddings in vector space.
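Closeness in vector space is usually measured with cosine similarity. The 2-D vectors below are made up purely for illustration (they are not learned from any data); they show how “dog” and “cat” can score close to 1 while an unrelated pair such as “no” and “bat” scores much lower.

```python
import numpy as np

# Made-up 2-D vectors chosen only to illustrate the idea.
vectors = {
    "dog": np.array([0.9, 0.8]),
    "cat": np.array([0.8, 0.9]),
    "no": np.array([-0.7, 0.2]),
    "bat": np.array([0.1, -0.9]),
}

def cosine(u, v):
    # Cosine of the angle between u and v: 1 means same direction.
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

print(round(cosine(vectors["dog"], vectors["cat"]), 3))  # 0.993
print(round(cosine(vectors["no"], vectors["bat"]), 3))   # -0.379
```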

Embeddings are used as the input to a machine learning model: because the model only understands numbers, each word is converted into a numeric vector, and that vector carries more of the word's meaning than an arbitrary number would.
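In practice, words are first mapped to integer indices and each index selects one row of an embedding matrix. The sketch below uses a hypothetical four-word vocabulary and a random matrix in place of learned values. It also shows that multiplying a one-hot vector by the matrix gives exactly the same row, which is what a dense layer fed one-hot input computes before adding its bias and activation.

```python
import numpy as np

vocab = ["cat", "dog", "nice", "place"]  # hypothetical toy vocabulary
word_to_index = {w: i for i, w in enumerate(vocab)}

rng = np.random.default_rng(42)
E = rng.normal(size=(len(vocab), 3))  # embedding matrix: one 3-D row per word

def one_hot(word):
    vec = np.zeros(len(vocab))
    vec[word_to_index[word]] = 1.0
    return vec

sentence = ["nice", "dog"]
ids = [word_to_index[w] for w in sentence]  # words -> integers
looked_up = E[ids]                          # integers -> vectors (row lookup)
via_one_hot = np.stack([one_hot(w) for w in sentence]) @ E  # same rows via a matrix product

print(ids)                                  # [2, 1]
print(np.allclose(looked_up, via_one_hot))  # True
```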

Limitations of Word Embeddings

- They cannot distinguish between words that are spelled the same but have different meanings (homonyms). For example, “rose” is a flower as well as the past tense of “rise”, but it gets a single vector.

- The larger the dataset or corpus, the more training is required, and memory use grows significantly. As the example above shows, even the three-sentence corpus needs a full word-by-word co-occurrence matrix.

- The embeddings can carry undesirable biases if the training dataset is not properly pre-processed.
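The memory growth is quadratic: a dense co-occurrence matrix stores one entry for every pair of vocabulary words. A quick calculation with a hypothetical helper, assuming 4-byte entries and no sparse storage (sparse formats reduce this considerably in practice):

```python
def cooccurrence_matrix_bytes(vocab_size, bytes_per_entry=4):
    # A dense V x V matrix stores one count per word pair.
    return vocab_size * vocab_size * bytes_per_entry

# 5 words (the three-sentence corpus), 8756 words (the corpus used below), 100,000 words.
for v in (5, 8756, 100_000):
    mib = cooccurrence_matrix_bytes(v) / 2**20
    print(f"{v:>7} words -> {mib:,.1f} MiB")
```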

Implementation of Word embedding with python code

For the dataset, you can use any large text: copy it into a .txt file and use that file as the corpus.

Step 1

Importing the required libraries and loading the dataset.

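```python
import re

import nltk
import numpy as np
from matplotlib import pyplot as plt
from nltk.corpus import stopwords
from keras.layers import Dense, Input, Dropout
from keras.models import Model

# The NLTK stopword list is downloaded separately; this only needs to run once.
nltk.download('stopwords')

# Read the whole corpus into a single string.
with open('../datasets/sherlock.txt', encoding='utf-8') as f:
    text = f.read()
```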

Step 2

- Pre-processing the data: using the .lower() function to convert all uppercase letters to lowercase.

- Using the re.sub() function to replace every run of non-letter characters with a space.

- Removing stopwords.

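```python
# The author skips the first 3433 characters, presumably the file's header;
# adjust or remove this offset for your own corpus.
data = text[3433:]
data = data.lower()
# Replace every run of non-letter characters with a single space.
data = re.sub('[^A-Za-z]+', ' ', data)
data = data.split()

# Removing stopwords drastically reduces the size of X (built in Step 5).
stop_words = set(stopwords.words('english'))
data = [word for word in data if word not in stop_words]
```

Step 3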

Building the vocabulary from the unique words in the data, along with how often each word occurs

```python
# np.unique returns the sorted unique words and the count of each one.
vocabulary, counts = np.unique(data, return_counts=True)
print(vocabulary.shape, counts.shape)
```
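Because np.unique returns the vocabulary sorted, the index of every word can also be found in one vectorized call with np.searchsorted. A small sketch on a hypothetical token list; producing one-hot rows from the integer ids only when they are needed avoids storing a full (number of words × vocabulary size) matrix.

```python
import numpy as np

data = ["holmes", "said", "watson", "holmes"]  # hypothetical toy token list
vocabulary, counts = np.unique(data, return_counts=True)

# np.unique sorts its output, so searchsorted finds each word's position.
ids = np.searchsorted(vocabulary, data)
print(vocabulary)  # ['holmes' 'said' 'watson']
print(counts)      # [2 1 1]
print(ids)         # [0 1 2 0]

# One-hot rows for any subset of ids, created on demand.
one_hot_rows = np.eye(len(vocabulary), dtype=np.float32)[ids]
print(one_hot_rows.shape)  # (4, 3)
```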

Step 4

Implementing one-hot encoding, which turns each word (a categorical feature) into a numeric vector the model can take as input.

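```python
# Map each word to its position in the (sorted) vocabulary once, up front.
word_to_index = {word: i for i, word in enumerate(vocabulary)}

def get_one_hot_vector(word):
    # A vector of zeros as long as the vocabulary, with a 1 at the word's index.
    vec = np.zeros((vocabulary.shape[0],), dtype=np.float32)
    vec[word_to_index[word]] = 1
    return vec

# One row per word of the corpus. This matrix is very large
# (number of words x vocabulary size), so float32 is used to halve its memory.
dataset = np.asarray([get_one_hot_vector(word) for word in data])
print(dataset.shape)
```

Step 5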

Building the input X and the target y, and then splitting them into training and test sets.

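```python
# Bigram input: each row concatenates the one-hot vectors of two consecutive words.
X = np.zeros((dataset.shape[0] - 1, dataset.shape[1] * 2), dtype=np.float32)
for i in range(X.shape[0]):
    X[i] = np.hstack((dataset[i], dataset[i + 1]))

# Target: the word at position i + 1. Note that this is also the second half
# of X[i], so the network can partly solve the task by copying its input.
y = dataset[1:]

print((X.shape, y.shape))
```

Output: ((261744, 17512), (261744, 8756))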

```python
# Keep the first 85% of the sequence for training and the rest for testing.
split = int(0.85 * X.shape[0])

X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]

print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)
```
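In Step 5 the target y[i] (dataset[i+1]) is also the second half of the input X[i]. One common alternative, similar in spirit to word2vec's CBOW (continuous bag of words) model, is to predict a word from its left and right neighbours, so the target position is excluded from the input. This is a swapped-in design, not the author's setup; the helper `context_window_pairs` and the toy word ids are hypothetical.

```python
import numpy as np

def context_window_pairs(ids, vocab_size):
    """Input: one-hot vectors of the previous and next word; target: the word between them."""
    ids = np.asarray(ids)
    eye = np.eye(vocab_size, dtype=np.float32)
    X = np.hstack((eye[ids[:-2]], eye[ids[2:]]))  # left and right neighbours
    y = eye[ids[1:-1]]                            # the middle word
    return X, y

ids = [0, 3, 1, 2, 3]  # hypothetical word ids for a 4-word vocabulary
X, y = context_window_pairs(ids, vocab_size=4)
print(X.shape, y.shape)  # (3, 8) (3, 4)
```

With the real data, the integer ids could come from the sorted vocabulary, and the resulting X and y would replace the bigram arrays before the split.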

Step 6

Implementing the word embedding model using Keras

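```python
embedding = 300  # size of each word vector

# Input: two concatenated one-hot vectors (17512 values for the author's corpus).
inp = Input(shape=(X.shape[1],))
# The 300 activations of this hidden layer are the learned embedding.
emb = Dense(embedding, activation='tanh')(inp)
emb = Dropout(0.4)(emb)
# Output: a probability for every vocabulary word (8756 for the author's corpus).
out = Dense(y.shape[1], activation='softmax')(emb)

model = Model(inputs=inp, outputs=out)
model.summary()
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# A second model sharing the same layers that returns the embedding instead
# of the prediction (dropout is inactive when predicting).
encoder = Model(inputs=inp, outputs=emb)

hist = model.fit(X_train, y_train,
                 epochs=3,
                 shuffle=False,
                 batch_size=1024,
                 validation_data=(X_test, y_test))
```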

The training log shows the loss decreasing and the accuracy increasing with each epoch.
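As a sanity check on the output of model.summary(), the number of trainable parameters follows directly from the layer sizes used above (Dropout adds none). The helper `dense_params` is hypothetical; it applies the standard count for a fully connected layer: one weight per input-output pair plus one bias per output.

```python
def dense_params(n_in, n_out):
    # Weights (n_in * n_out) plus one bias per output unit.
    return n_in * n_out + n_out

input_size, embedding, vocab_size = 17512, 300, 8756  # the author's sizes
hidden = dense_params(input_size, embedding)
output = dense_params(embedding, vocab_size)
print(hidden, output, hidden + output)  # 5253900 2635556 7889456
```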

Step 7

Plotting the training and validation loss and accuracy

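```python
# Keras 2.3 and later record accuracy under 'accuracy'; older versions used 'acc'.
acc_key = 'accuracy' if 'accuracy' in hist.history else 'acc'

plt.figure()
plt.plot(hist.history['loss'], 'b', label='training loss')
plt.plot(hist.history['val_loss'], 'g', label='validation loss')
plt.xlabel('epoch')
plt.legend()
plt.show()

plt.figure()
plt.plot(hist.history[acc_key], 'b', label='training accuracy')
plt.plot(hist.history['val_' + acc_key], 'g', label='validation accuracy')
plt.xlabel('epoch')
plt.legend()
plt.show()
```

Training loss (blue) vs. validation loss (green)

Training accuracy (blue) vs. validation accuracy (green)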

Step 8

Computing cosine similarities between word vectors

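```python
# Hidden-layer output (the embedding) for every input row.
a = encoder.predict(X)

w2v = {}
alpha = 0.9  # weight of the running vector; 1 - alpha goes to the new sample
for i in range(X.shape[0]):
    word = vocabulary[y[i].argmax()]  # the target word of row i
    if word in w2v:
        # Exponential running average over all occurrences of the word.
        w2v[word] = alpha * w2v[word] + (1 - alpha) * a[i]
    else:
        w2v[word] = a[i]

print(len(w2v.keys()))
```

Output: 8756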

```python
def cosine_similarity(v1, v2):
    # Dot product divided by the product of the two vector lengths.
    return np.dot(v1, v2) / np.sqrt((v1 ** 2).sum() * (v2 ** 2).sum())

v1 = w2v['sherlock']
v2 = w2v['holmes']
print(cosine_similarity(v1, v2))
```

Output: 0.340144
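A natural next step is to rank all words by cosine similarity to a query word. The helper `most_similar` below is a hypothetical sketch; it runs on made-up toy vectors here, and with the trained model the w2v dictionary built above can be passed instead.

```python
import numpy as np

def most_similar(word, w2v, top_n=3):
    """Return the top_n words whose vectors have the highest cosine similarity to word."""
    words = [w for w in w2v if w != word]
    matrix = np.stack([w2v[w] for w in words])
    query = w2v[word]
    sims = matrix @ query / (np.linalg.norm(matrix, axis=1) * np.linalg.norm(query))
    best = np.argsort(-sims)[:top_n]  # indices of the largest similarities first
    return [(words[i], float(sims[i])) for i in best]

# Made-up vectors for illustration only.
toy = {
    "sherlock": np.array([1.0, 0.2]),
    "holmes": np.array([0.9, 0.3]),
    "watson": np.array([0.7, 0.6]),
    "street": np.array([-0.5, 1.0]),
}
print(most_similar("sherlock", toy, top_n=2))
```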

Conclusion

We have now learned how word embeddings work, how they differ from the Bag of Words method, and what the pros and cons of using them are, and we have implemented word embeddings in Python.

No single NLP approach is optimal for every problem, since NLP is a very large field that is still being explored, but this walkthrough still gives useful insight into it.
